With Wolfgang Engel’s blessing, I’ve added the ShaderX2 books’ (both of them) CD-ROM code samples as zip files and put links in the ShaderX guide. The code is hardly bleeding edge at this point, of course, but code doesn’t rot – there are many bits that are still useful. I’ve also folded most of the code addenda into the distributions themselves. The only exception at this point is Thomas Rued’s stereographic rendering shaders; more up-to-date information (and an SDK) is available from the company he works with, ColorCode 3-D.

Our book’s figures now downloadable for fair use

A professor contacted us about whether we had digital copies of our figures available for use on her course web pages for students. Well, we certainly should (and our publisher agrees), and would have done this a while ago if we had thought of it. So, after a few hours of copying and saving with MWSnap, I’ve made an archive of most of the figures in Real-Time Rendering, 3rd edition. It’s a 34 MB download:

http://www.realtimerendering.com/downloads/RTR3figures.zip

Update: preview and download individual figures on Flickr

Update: figures for the Fourth Edition are here.

This archive should make preparation a lot more pleasant and less time-consuming for instructors, vs. scanning in pages of our book or redrawing figures from scratch. Here’s the top of the README.html file in this archive:

These figures and tables from the book are copyright A.K. Peters Ltd. We have provided these images for use under United States Fair Use doctrine (or similar laws of other countries), e.g., by professors for use in their classes. All figures in the book are not included; only those created by the authors (directly, or by use of free demonstration programs, as listed below) or from public sources (e.g., NASA) are available here. Other images in the book may be reused under Fair Use, but are not part of this collection. It is good practice to acknowledge the sources of any images reused – a link to http://www.realtimerendering.com we suspect would be useful to students, and we have listed relevant primary sources below for citation. If you have questions about reuse, please contact A.K. Peters at service@akpeters.com.

I’ve added a link to this archive at the top of our main page. I should also mention that Tomas’ Powerpoint slidesets for a course he taught based on the second edition of our book are still available for download. The slides are a bit dated in spots, but are a good place to start. If you have made a relevant teaching aid available, please do comment and let others know.

SIGGRAPH Asia 2009 Papers – Micro-Rendering, RenderAnts, and More

A partial papers list has been up on Ke-Sen Huang’s SIGGRAPH Asia 2009 page for a while now, but I was waiting until either the full list was up or an interesting preprint appeared before mentioning it. Well, the latter has happened – a preprint and video are now available for the paper Micro-Rendering for Scalable, Parallel Final Gathering. It shares many authors (including the first) with one of the most interesting papers from last year’s SIGGRAPH Asia conference, Imperfect Shadow Maps for Efficient Computation of Indirect Illumination. Last year’s paper proposed a way to efficiently compute indirect shadowing by rendering a very large number of very low-quality shadowmaps, using a coarse point-based scene representation and some clever hole-filling. This year’s paper extends this occlusion technique to support full global illumination. Some of the same authors were recently responsible for another notable extension of an occlusion method (SSAO in this case) to global illumination.

RenderAnts: Interactive REYES Rendering on GPUs is another notable paper at SIGGRAPH Asia this year; no preprint yet, but a technical report is available. A technical report is also available for another interesting paper, Debugging GPU Stream Programs Through Automatic Dataflow Recording and Visualization.

No preprint or technical report, but promising paper titles: Approximating Subdivision Surfaces with Gregory Patches for Hardware Tessellation and Real-Time Parallel Hashing on the GPU.

Looking at this list and last year’s accepted papers, SIGGRAPH Asia seems to be more accepting of real-time rendering papers than the main SIGGRAPH conference. Combined with the strong courses program, it’s shaping up to be a very good conference this year.

Fundamentals of Computer Graphics, 3rd Edition

One bit of deja vu for me at SIGGRAPH this year was another book signing at the A K Peters booth. Last year’s SIGGRAPH had the signing for Real-Time Rendering; this year I was at the book signing for the third edition of Fundamentals of Computer Graphics. My presence at the signing was due to the fact that I wrote a chapter on graphics for games (this edition also has new chapters on implicit modeling, color, and visualization, as well as updates to the existing chapters). As in the case of Real-Time Rendering, I was interested in contributing to this book as a fan of the previous editions. Fundamentals is targeted as a “first graphics book” so it has a slightly different audience than Real-Time Rendering, which is meant to be the reader’s second book on the subject.

At the A K Peters booth I also got to try out the Kindle edition of Fundamentals (the illustrations in Real-Time Rendering rely on color to convey information, so a Kindle edition will have to wait for color devices). I haven’t jumped on the Kindle bandwagon personally (the DRM bothers me; when I buy something I like to own it), but I know people who are quite pleased with their Kindle (or iPhone Kindle application).

HPG 2009 Report

I got to attend HPG this year, which was a fun experience. At smaller, more focused conferences like EGSR and HPG you can actually meet all the other attendees. The papers are also more likely to be relevant than at SIGGRAPH, where the subject matter of the papers has become so broad that they rarely seem to relate to graphics at all.

I’ve written about the HPG 2009 papers twice before, but six of the papers lacked preprints and so it was hard to judge their relevance. With the proceedings, I can take a closer look. The “Configurable Filtering Unit” paper is now available on Ke-Sen Huang’s webpage, and the rest are available at the ACM digital library. The presentation slides for most of the papers (including three of these six) are available at the conference program webpage.

A Directionally Adaptive Edge Anti-Aliasing Filter – This paper describes an improved MSAA mode AMD has implemented in their drivers. It does not require changing how the samples are generated, only how they are resolved into final pixel colors; this technique can be implemented on any system (such as DX10.1-class PCs, or certain consoles) where shaders can access individual samples. In a nutshell, the technique inspects samples in adjacent pixels to more accurately compute edge location and orientation.

Image Space Gathering – This paper from NVIDIA describes a technique where sharp shadows and reflections are rendered into offscreen buffers, upon which an edge-aware blur operation (similar to a cross bilateral filter) is used to simulate soft shadows and glossy reflections. The paper was targeted for ray-tracing applications, but the soft shadow technique would work well with game rasterization engines (the glossy reflection technique doesn’t make sense for the texture-based reflections used in game engines, since MIP-mapping the reflection textures is faster and more accurate).
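To make the "edge-aware blur" idea concrete, here is a minimal sketch of a plain cross bilateral filter in one dimension. It is not the filter from the NVIDIA paper, which I have only summarized above; the function name, the parameters, and the choice of depth as the guide signal are all assumptions for illustration. The point is just that the blur weights come from a second buffer, so a hard shadow is softened within a surface but not across a depth discontinuity.

```python
import math

def cross_bilateral_1d(signal, guide, radius=2, sigma_space=1.5, sigma_guide=0.1):
    """Blur `signal`, weighting each neighbor by spatial distance and by
    similarity in a separate `guide` buffer (e.g. depth). Hypothetical helper."""
    n = len(signal)
    out = []
    for i in range(n):
        total = 0.0
        weight_sum = 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            # Ordinary Gaussian falloff with distance in the image.
            w_space = math.exp(-((i - j) ** 2) / (2.0 * sigma_space ** 2))
            # The "cross" part: falloff with difference in the guide buffer,
            # not in the signal being blurred.
            w_guide = math.exp(-((guide[i] - guide[j]) ** 2) / (2.0 * sigma_guide ** 2))
            w = w_space * w_guide
            total += w * signal[j]
            weight_sum += w
        # weight_sum >= 1 because the center sample always has weight 1.
        out.append(total / weight_sum)
    return out

hard_shadow = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]
flat_depth = [1.0] * 6                          # one continuous surface
split_depth = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]    # depth edge at the shadow edge

soft = cross_bilateral_1d(hard_shadow, flat_depth)
kept = cross_bilateral_1d(hard_shadow, split_depth)
```

With `flat_depth` the shadow boundary is smeared into a soft ramp; with `split_depth` the two surfaces do not bleed into each other and the edge stays hard.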

Scaling of 3D Game Engine Workloads on Modern Multi-GPU Systems – systems with multiple GPUs used to be extremely rare, but they are becoming more common (mostly in the form of multi-GPU cards rather than multi-card systems). This paper appears to do a thorough analysis of the scaling of game workloads on these systems, but the workloads used are unfortunately pretty old (the newest game analyzed was released in 2006).

Bucket Depth Peeling – I’m not a big fan of depth peeling systems, since they invest massive resources (rendering the scene multiple times) to solve a problem which is pretty marginal (order-independent transparency). This paper solves the multi-pass issue, but is profligate with a different resource – bandwidth. It uses extremely fat frame buffers (128 bytes per pixel).

CFU: Multi-purpose Configurable Filtering Unit for Mobile Multimedia Applications on Graphics Hardware – This paper proposes that hardware manufacturers (and API owners) add a set of extensions to fixed-function texture hardware. The extensions are quite useful, and enable accelerating a variety of applications significantly (around 2X). Seems like a good idea to me, but Microsoft/NVIDIA/AMD/etc. may be harder to convince…

Embedded Function Composition – The first two authors on this paper are Turner Whitted (inventor of recursive ray tracing) and Jim Kajiya (who defined the rendering equation). So what are they up to nowadays? They describe a hardware system where configurable hardware for 2D image operations is embedded in the display device, after the frame buffer output. The system is targeted to applications such as font and 2D overlays. The method in which operations are defined is quite interesting, resembling FPGA configuration more than shader programming.

Besides the papers, HPG also had two excellent keynotes. I missed Tim Sweeney’s keynote (the slides are available here), but I was able to see Larry Gritz’s keynote. The slides for Larry’s keynote (on high-performance rendering for film) are also available, but are a bit sparse, so I will summarize the important points.

Larry started by discussing the differences between film and game rendering. Perhaps the most obvious one is that games have fixed performance requirements, and quality is negotiable; film has fixed quality requirements, and performance is negotiable. However, there are also less obvious differences. Film shots are quite short – about 100-200 frames at most; this means that any precomputation, loading or overhead must be very fast since it is amortized over so few frames (it is rare that any precomputation or overhead from one shot can be shared with another). Game levels last for many tens of thousands of frames, so loading time is amortized more efficiently. More importantly, those frames are multiplied by hundreds of thousands of users, so precomputation can be quite extensive and still pay off. Larry makes the point that comparing the 5-10 hours/frame which is typical of film rendering with the game frame rate (60 or 30 fps) is misleading; a fair comparison would include game scene loading times, tool precomputations, etc. The important bottleneck for film rendering (equivalent to frame rate for games) is artist time.

Larry also discussed why film rendering doesn’t use GPUs; the data for a single frame doesn’t fit in video memory, rooms full of CPU blades are very efficient (in terms of both Watts and dollars), and the programming models for GPUs have yet to stabilize. Larry then discussed the reasons that, in his opinion, ray tracing is better suited for film rendering than the REYES algorithm used in Pixar’s Renderman. As background, it should be noted that Larry presides over Sony Pictures Imageworks’ implementation of the Arnold ray tracing renderer which they are using to replace Renderman. An argument for replacing Renderman with a full ray-tracing renderer is especially notable coming from Larry Gritz; Larry was the lead architect of Renderman for some time, and has written one of the more important books popularizing it. Larry’s main points are that REYES has inherent inefficiencies, it is harder to parallelize, effects such as shadows and reflections require a hodgepodge of techniques, and once global illumination is included (now common in Renderman projects) most of REYES’ inherent advantages go away. After switching to ray-tracing, SPI found that they need to render fewer passes, lighting is simpler, the code is more straightforward, and the artists are more productive. The main downside is that displacing geometric detail is no longer “free” as it was with REYES.

Finally, Larry discussed why current approaches to shader programming do not work that well with ray tracing; they have developed a new shading language which works better. Interestingly, SPI is making this available under an open-source license; details on this and other SPI open-source projects can be found here.

I had a chance to chat with Larry after the keynote, so I asked him about hybrid approaches that use rasterization for primary visibility, and ray-tracing for shadows, reflections, etc. He said such approaches have several drawbacks for film production. Having two different representations of the scene introduces the risk of precision issues and mismatches, rays originating under the geometry, etc. Renderers such as REYES shade on vertices, and corners and crevices are particularly bad as ray origins. Having to maintain what are essentially two separate codebases is another issue. Finally, once you use GI then the primary intersections are a relatively minor part of the overall frame rendering time, so it’s not worth the hassle.

In summary, HPG was a great conference, well worth attending. Next year it will be co-located with EGSR. The combination of both conferences will make attendance very attractive, especially for people who are relatively close (both conferences will take place in Saarbrucken, Germany). In 2011, HPG will again be co-located with SIGGRAPH.

HPG and SIGGRAPH: pix and links

Some seven links to keep you busy while we digest HPG and SIGGRAPH:

- Pictures of HPG and SIGGRAPH – even though just about everyone at these conferences has a camera in some form on them, we just about never take pictures. I decided to try to photograph anyone I recognized this year.

- Tim Sweeney’s HPG keynote slides – I didn’t attend the keynote, unfortunately, but heard about it. Main takeaway for me is that programming these highly parallel machines is hard, and the more that IHVs can do to ease the burden and remove limitations the more successful they will be.

- While waiting for our HPG reports, read Steve Worley’s.

- The course notes for “Advances in Real-Time Rendering in 3D Graphics and Games” will be up in a few weeks, if not sooner. Crytek’s presentation is available at their website.

- The “Beyond Programmable Shading” course notes are available now. I particularly liked Johan Andersson’s talk, partially for the sheer complexity of it all. The various factors that affect making a game engine fast are a bit mind-boggling.

- The place to go for interactive ray tracing development information is the ompf.org forum.

- This was the first year ever that I didn’t attend the Electronic Theater. Well, I did attend the first half-hour (live real-time demos), but then found myself looking at my watch as colorful but meaningless things occurred on the screen. I think the fact that we could attend the E.T. without needing a ticket meant that I could keep putting it off and also wouldn’t feel I lost anything if I missed it. If SIGGRAPH had issued me a ticket for a specific night, I suspect I would have willingly stayed for all of it, not wanting to lose the value of the ticket. Psychology. All that said, the best colorful but meaningless real-time demo I saw was “DT4 Identity SA”, freeware which runs on a Mac and is quite charming.

HPG 2009 – a closer look

When discussing things to do and see at SIGGRAPH, it is important to note the co-located conferences. This year, SIGGRAPH is co-located with the Eurographics Symposium on Sketch-Based Interfaces and Modeling (SBIM), the Symposium on Computer Animation (SCA), Non-Photorealistic Animation and Rendering (NPAR), and High-Performance Graphics (HPG). SCA has had good animation papers over the years, and is of interest to many game graphics programmers. NPAR is also a good conference for anyone interested in stylized rendering. In this post I will focus on HPG, which is a new conference formed out of the merger of the venerable Graphics Hardware conference, and the newcomer Symposium on Interactive Ray Tracing.

HPG is a three-day conference; the first two days are just before SIGGRAPH, and the third overlaps the first day of SIGGRAPH (unfortunately conflicting with the excellent SIGGRAPH course, Advances in Real-Time Rendering in 3D Graphics and Games).

HPG has managed to line up two pretty amazing keynotes. The first one is by Larry Gritz on film production rendering. Larry is a legend in the field; he was with Pixar from the Toy Story days, and co-wrote one of the most well-regarded books on Renderman. He has since worked on several important renderers (BMRT, Gelato), and is now at Sony Pictures Imageworks. The second keynote is by Tim Sweeney, on the future of GPUs. As the outspoken chief architect of Epic’s Unreal Engine, Tim should need no introduction.

At the end of the conference, the two keynote speakers are joined by Steve Parker (NVIDIA) and Aaron Lefohn (Intel) for a panel on high-performance graphics in 7 years’ time.

HPG also has posters and “Hot 3D” systems presentations (hardware manufacturers talking about their latest designs). Inexplicably, although the acceptance deadline for both has long since passed, the content of neither of these is listed on the conference website yet.

I briefly discussed HPG papers in a previous post, but then only paper titles were available, making it hard to judge relevance; now many of the papers have preprints linked from Ke-Sen Huang’s HPG 2009 papers page.

Some of the papers look relevant to current or near-future work. There are two interesting antialiasing papers: Morphological Antialiasing was covered by Eric in a recent post. The other antialiasing paper (A Directionally Adaptive Edge Anti-Aliasing Filter) does not have a preprint, but the title is promising. It is notable that one of the authors on this paper (Jason Yang) is listed as a speaker at the SIGGRAPH Advances in Real-Time Rendering in 3D Graphics and Games course; perhaps he will discuss the paper there. Although the NVIDIA paper Image Space Gathering has no preprint (yet), some information on this technique was disclosed at GDC: it involves rendering reflections and shadows into 2D buffers and then performing cross bilateral filters to mimic glossy reflections and soft shadows. I have seen similar techniques used in games, so it will be interesting to hear NVIDIA’s take on this concept. Another promising paper title: Scaling of 3D Game Engine Workloads on Modern Multi-GPU Systems.

The paper Parallel View-Dependent Tessellation of Catmull-Clark Subdivision Surfaces deals with tessellation using GPGPU methods rather than the DX11 tessellation pipeline; I’m not an expert in this area so it’s hard for me to judge, but it might be of interest for people working in this field.

I’m a bit skeptical of depth peeling techniques in general, but recent work in this area has shown some promise. The paper Bucket Depth Peeling lacks a preprint at this moment, but I look forward to learning more about it at the conference.

I found the title Data-Parallel Rasterization of Micropolygons With Defocus and Motion Blur promising because I am interested in the REYES micropolygon algorithm, and particularly in the way it handles defocus and motion blur effects. The technique presented in this paper appears to be less efficient than the REYES method, except for cases with very high velocity and/or defocus. The paper presents a GPU-efficient version of the REYES algorithm as well as an alternative algorithm which is faster in some cases. One of the authors has a blog post that gives some interesting context for the paper.

The number of actual graphics hardware papers at the Graphics Hardware conference has been declining for years, which is probably one of the factors that precipitated the conference merger with IRT. This year there is only one paper which is clearly about hardware design: PFU: Programmable Filtering Unit for Mobile Multimedia Applications on Graphics Hardware. It has a fairly self-explanatory title, which is fortunate since it has no preprint available. Texture filtering is the last unassailable bastion of fixed-function hardware; for example, it is the only fixed-function unit in Larrabee. Programmable filtering is an intriguing concept; I look forward to the paper presentation. There is one more paper that may be about hardware (Embedded Function Composition); but the title is a bit opaque and it also has no preprint, so it is hard to be sure.

Despite my claim in the previous blog post, there do indeed appear to be quite a few papers about ray tracing this year: Efficient Ray Traced Soft Shadows using Multi-Frusta Traversal, Understanding the Efficiency of Ray Traversal on GPUs, Faster Incoherent Rays: Multi-BVH Ray Stream Tracing, Accelerating Monte Carlo Shadows Using Volumetric Occluders and Modified kd-Tree Traversal, Selective and Adaptive Supersampling for Real-Time Ray Tracing, Spatial Splits in Bounding Volume Hierarchies, Object Partitioning Considered Harmful: Space Subdivision for BVHs, and A Parallel Algorithm for Construction of Uniform Grids. Another paper, Hardware-Accelerated Global Illumination by Image Space Photon Mapping, combines image-space, GPU-accelerated methods for the initial bounce and final gather with ray-tracing for a complete photon mapping solution.

There are only three “GPGPU” papers this year; two on GPU stream compaction (copying selected elements of an array into a smaller array): Efficient Stream Compaction on Wide SIMD Many-Core Architectures and Stream Compaction for Deferred Shading, and one on computing minimum spanning trees for graphs (Fast Minimum Spanning Tree for Large Graphs on the GPU).
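For readers who haven't met stream compaction before, here is a minimal sketch of the generic scan-and-scatter formulation. It is not taken from either paper listed above, and it runs sequentially in Python; the function name and predicate are assumptions for illustration. The structure is what matters: an exclusive prefix sum over keep/discard flags gives every surviving element its output slot, so the final writes are independent of one another, which is what makes the operation a natural fit for a GPU.

```python
from itertools import accumulate

def compact(values, keep):
    """Copy the elements of `values` for which keep(v) is true into a
    smaller list, preserving order. Hypothetical helper."""
    # Step 1: one flag per element (1 = keep, 0 = discard).
    flags = [1 if keep(v) else 0 for v in values]
    # Step 2: exclusive scan. offsets[i] = number of kept elements before i,
    # which is exactly the output index of element i if it is kept.
    inclusive = list(accumulate(flags))
    offsets = [inc - f for inc, f in zip(inclusive, flags)]
    total = inclusive[-1] if inclusive else 0
    # Step 3: scatter. Each kept element writes to its own slot, so on
    # parallel hardware these writes need no synchronization.
    out = [None] * total
    for i, v in enumerate(values):
        if flags[i]:
            out[offsets[i]] = v
    return out

survivors = compact([3, 0, 5, 0, 0, 7], lambda v: v != 0)
```

On a GPU the scan in step 2 is the part that takes real work to do efficiently.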

Morphological Antialiasing

An Intel research group has put their papers and code up for download. I had asked Alexander Reshetov about his morphological antialiasing scheme (MLAA), as it sounded interesting – it was! He generously sent a preprint, answered my many questions, and even provided source code for a demo of the method. What I find most interesting about the algorithm is that it is entirely a post-process. Given an image full of jagged edges, it searches for such edges and blends these accordingly. There are limits to such reconstruction, of course, but the idea is fascinating and most of the time the resulting image looks much better. Anyway, read the paper.

As an example, I took a public domain image from the web, converted it to a bitonal image so it would be jaggy, then applied MLAA to see how the reconstruction looked. The method works on full color images (though has to deal with more challenges when detecting edges). I’m showing a black and white version so that the effect is obvious. So, here’s a zoom in of the jaggy version:

And here are the two smoothed versions:

Which is which? It’s actually pretty easy to figure: the original, on the left, has some JPEG artifacts around the edges; the MLAA version, to the right, doesn’t, since it was derived from the “clean” bitonal image. All in all, they both look good.

Here’s the original image, unzoomed:

The MLAA version:

For comparison, here’s a 3×3 Gaussian blur of the jaggy image; blurring helps smooth edges (at a loss of overall crispness), but does not get rid of jaggies. Note the horizontal vines in particular show poor quality:

Here’s the jaggy version derived from the original, before applying MLAA or the blur:

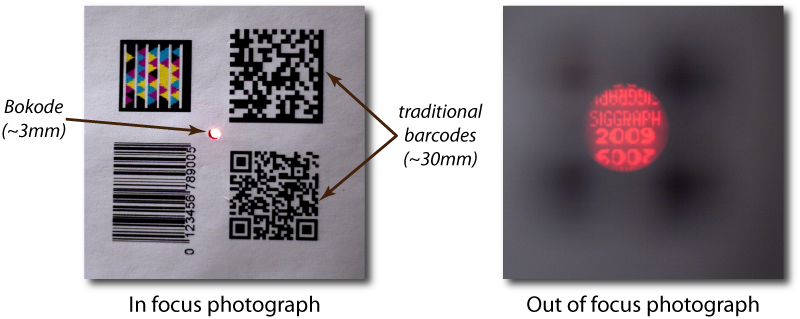

Bokode – clever!

Not directly relevant to real-time rendering (although there might be some tangentially related applications in areas like augmented reality), this SIGGRAPH 2009 paper is just painfully clever. It exploits the phenomenon of bokeh (the large circular blobs that small intense light sources generate in out-of-focus images) to create tiny barcodes that can be seen from a distance by cameras. They put a lenslet over the barcode, so that when viewed in a defocused manner you see a large circular blob – with a sharp image of the barcode in the center!

7 Things for July 26th

- Books, books, and more books: I received review copies of two books. Best of Game Programming Gems is as it sounds, certainly cheaper than buying the seven books in this series, no real review needed (look inside). Game Engine Architecture is about just that, how to make a professional-grade game rendering system, from soup to nuts (you can look inside). Eberly’s two books are the previous notable works in this area, but are quite different than this new volume. While they focus almost exclusively on algorithms, this book attempts to cover the whole task of developing an engine: what to use for source control, dealing with memory management and in-game profiling, input devices, SIMD, and many other practical topics. There is also algorithmic coverage of rendering, animation, collision detection and physics, among other areas. Naturally, the amount of information on each area is limited by page count (the book’s a solid 860 pages), but in my brief skim it looks like most of the critical areas and concepts are touched on. You won’t become an expert in any one area from this volume, but it looks like you’ll have some reasonably deep understanding of the elements that go into making a game engine. Quite an impressive work, and I know of nothing else in this area that is so detailed. I hope I get a chance to read it (who am I fooling? Though I do wish I had the time…) – well, at the least, it’s a place I’ll first go if I want to learn about a topic in game development that I know little about. If you’d rather wax nostalgic about great game engines you have known, as well as what the state of the art is, this article is for you (oh, yeah, the author of this new book works at the company that made #3).

- Looking around for titles I’d like to look over at SIGGRAPH, I found these: Game Graphics Programming, Programming the Cell Processor: For Games, Graphics, and Computation, Introduction to 3D Game Programming with DirectX 10, Ultimate Game Programming with DirectX, 2nd Ed., Advanced Game Programming, Game Coding Complete. Which all sort of sound the same (except for the Cell book), but I’d be happy to page through each and see if it looks promising.

- There’s a worthwhile comparison of average vertex normal computation methods on the MeshLab blog. The author gives the nod to Thürmer and Wüthrich’s method. You can try each of the three using MeshLab itself.

- Sure, Spore didn’t light the world on fire as many of us hoped, but a lot of cool technology was explored. Chris Hecker has a worthwhile rundown of some of the great stuff they worked on.

- There are some surprisingly affordable 3D stereolithography objects available on Shapeways. I bought Spiral Cage (tiny, but impressive, and so cheap), Clematis (looks delicate, but is quite springy), and Gyroid (pricier, but more sizeable and a fun form). It’s great to see so many people exploring such areas; here’s a detailed summary of resources. Even if you never plan on getting involved, the Flickr area dedicated to such techniques is worth a browse.

- This one amused me: a cloud computing company had a contest that was meant to show off Ruby and cloud computing strengths. It was won by people brute-forcing the problem with GPUs: 16 used by the first-place winner (plus 117 CPU cores, which had less performance total than the 16 GPUs), 4 by the second. Steve Worley and others talk about the GPU approach on the CUDA forum (his program, shared with the community there, was used to win second place).

- I admire the dedication.
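A footnote on the vertex normal item in the list above: Thürmer and Wüthrich’s method weights each incident face normal by the angle that face subtends at the vertex. Here is a minimal sketch of that idea, not MeshLab’s code; the function names and the triangle representation (tuples of three points, consistently wound) are assumptions for illustration.

```python
import math

def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _normalize(a):
    length = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return (a[0] / length, a[1] / length, a[2] / length)

def angle_weighted_normal(vertex, triangles):
    """Average the normals of the triangles that share `vertex`, weighting
    each by the corner angle at `vertex`. Assumes non-degenerate triangles
    with consistent winding. Hypothetical helper."""
    total = [0.0, 0.0, 0.0]
    for tri in triangles:
        k = tri.index(vertex)
        # The two edges leaving the vertex, in winding order.
        e1 = _normalize(_sub(tri[(k + 1) % 3], vertex))
        e2 = _normalize(_sub(tri[(k + 2) % 3], vertex))
        face_normal = _normalize(_cross(e1, e2))
        # Corner angle at the vertex; clamp guards against rounding error.
        cos_angle = max(-1.0, min(1.0, sum(x * y for x, y in zip(e1, e2))))
        angle = math.acos(cos_angle)
        for axis in range(3):
            total[axis] += angle * face_normal[axis]
    return _normalize(total)

origin = (0.0, 0.0, 0.0)
# A 90-degree corner lying in the xy-plane and a 45-degree corner in the xz-plane.
floor = (origin, (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
wall = (origin, (0.0, 0.0, 1.0), (1.0, 0.0, 1.0))
normal = angle_weighted_normal(origin, [floor, wall])
```

The appeal of angle weighting is that the result does not change when a face is split into smaller triangles, since the angles at the vertex still add up to the same total.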